For a visual artist, the idea of image generation with AI systems is initially threatening. Suddenly, people without technical skills can create interesting and complex visual media. Regarding this strange shift, a common narrative among artists is: "Why are the machines creating art and humans are still working office and warhorse jobs?"

I remember some of the conversations I had initially with some of my creative peers. One brought up this idea that these generative systems could be used to create "color palettes for ideas," which I think is an excellent way to frame the technology. Essentially, using these tools to quickly draft ideas can shorten the bandwidth between an idea and its execution. Other voices mentioned that these models are trained on our data. They learn from massive amounts of images, many of which are being used without the original creator's consent. Theoretically, we should be getting paid by these companies since they are profiting from the creations individuals.

The room was split. Some said it was unethical to use the models at all, and that it is an act of protest to avoid the technology. Others saw the benefits, pushing to use it cautiously as a tool, and not a crutch.

I found myself in the middle, bouncing between both sides in conversations. Eventually though, my curiosity got the best of me and I paid for some credits to use Dall-e 2 towards the end of summer 2023. I wanted to know where the limits lied, if I could push past them, and figure out what got the most interesting results.

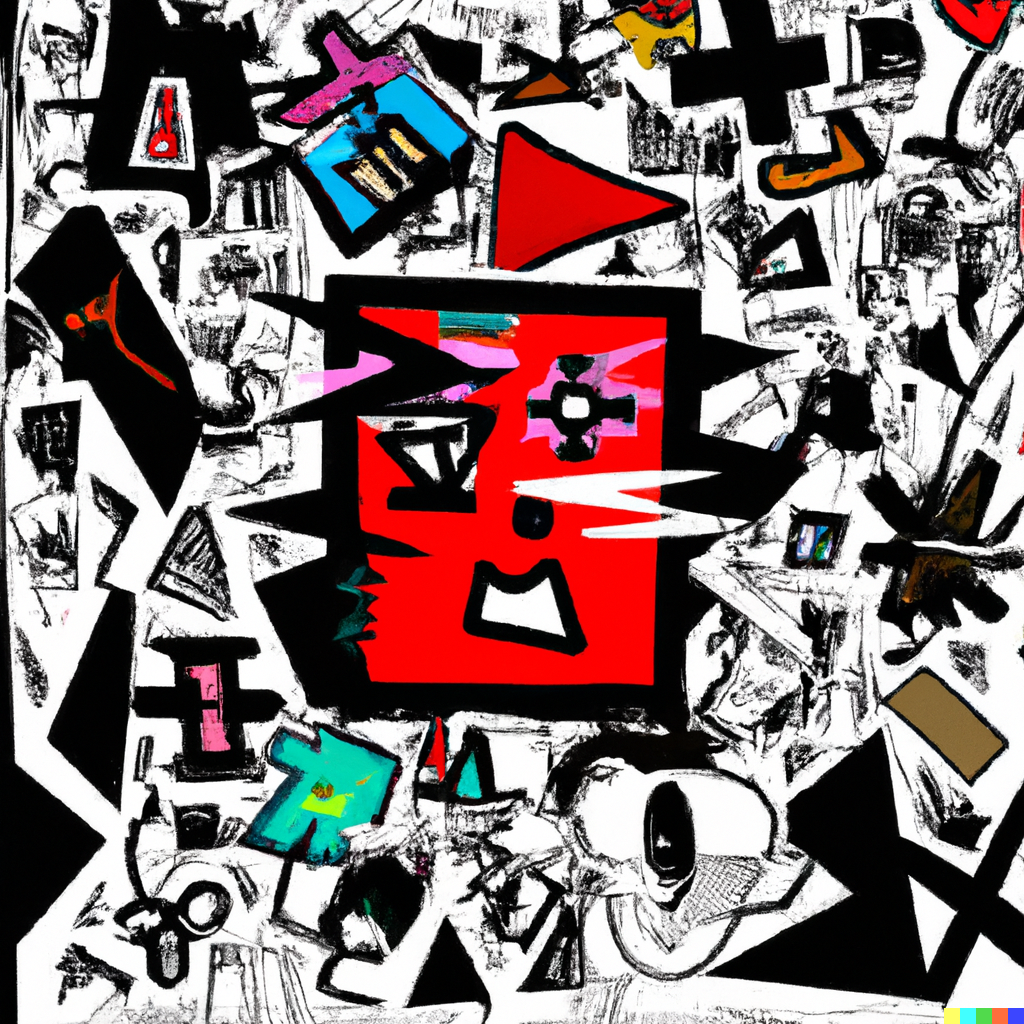

Prompt: "cat thinking about math in the style of basquiat"

"man thinking about war in the style of basquiat"

"fear of god in the style of basquiat"

"broken world in the style of basquiat"

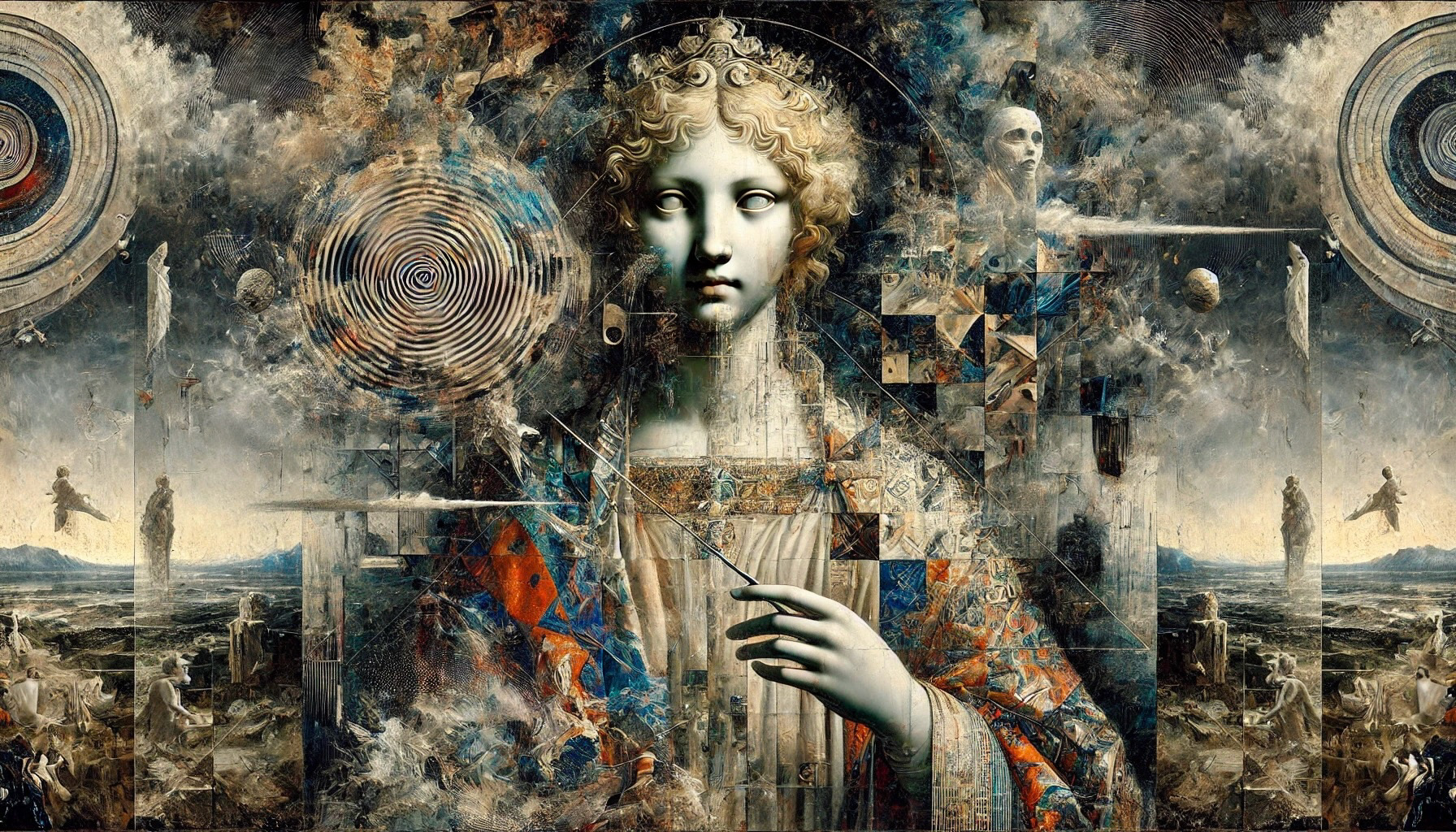

These are some generations from my first session on DALL-E 2 (Every image has the prompt attached to it). I started by using "in the style of basquiat" in my prompts because it let me see how the model handles a deeper abstraction of what I am asking it. If I just asked for "cat thinking about math," I would get much less interesting results. My goal is always to poke at what is possible.

fear of the future of automation" painted by the next great abstract artist that has a style no-one has ever conceived

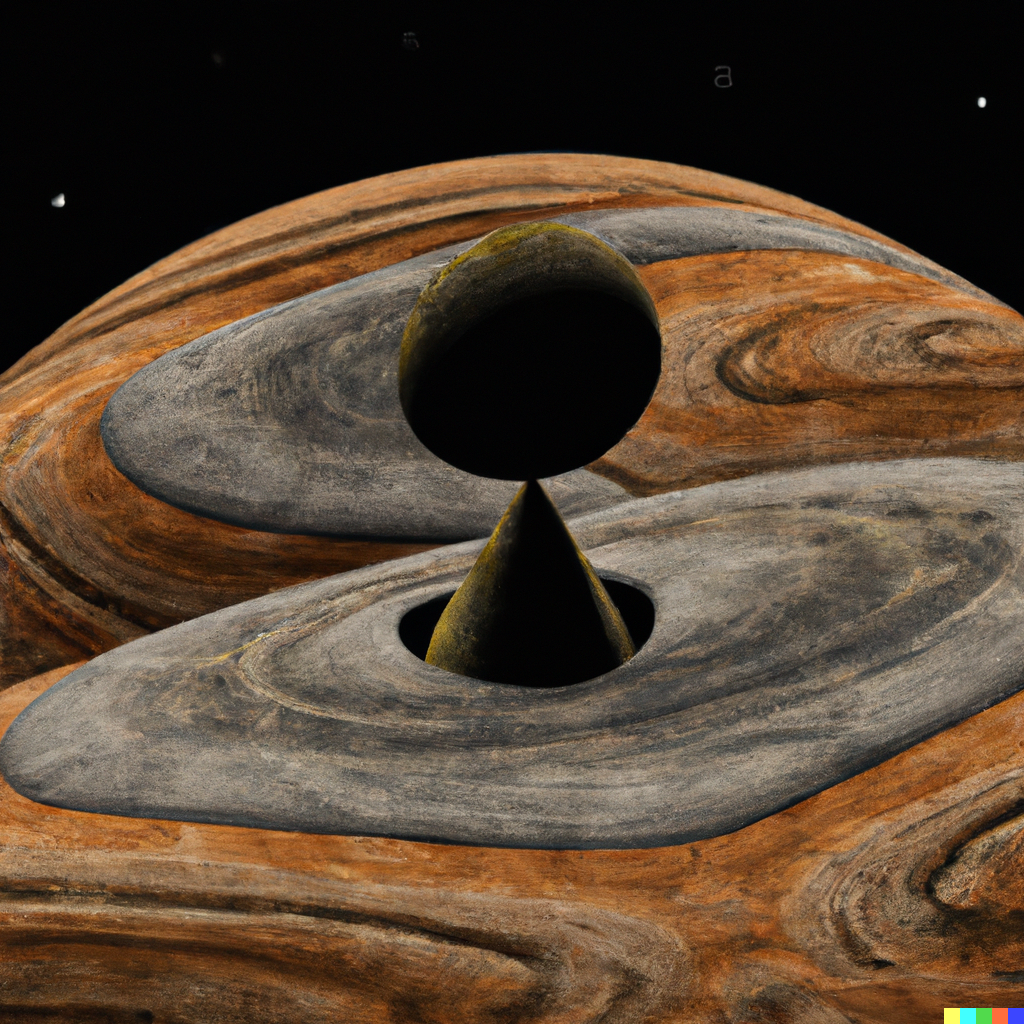

"human" painted by a 300 year old alien on psychedelics

art created by a 30000 year old alien from a planet that banned art

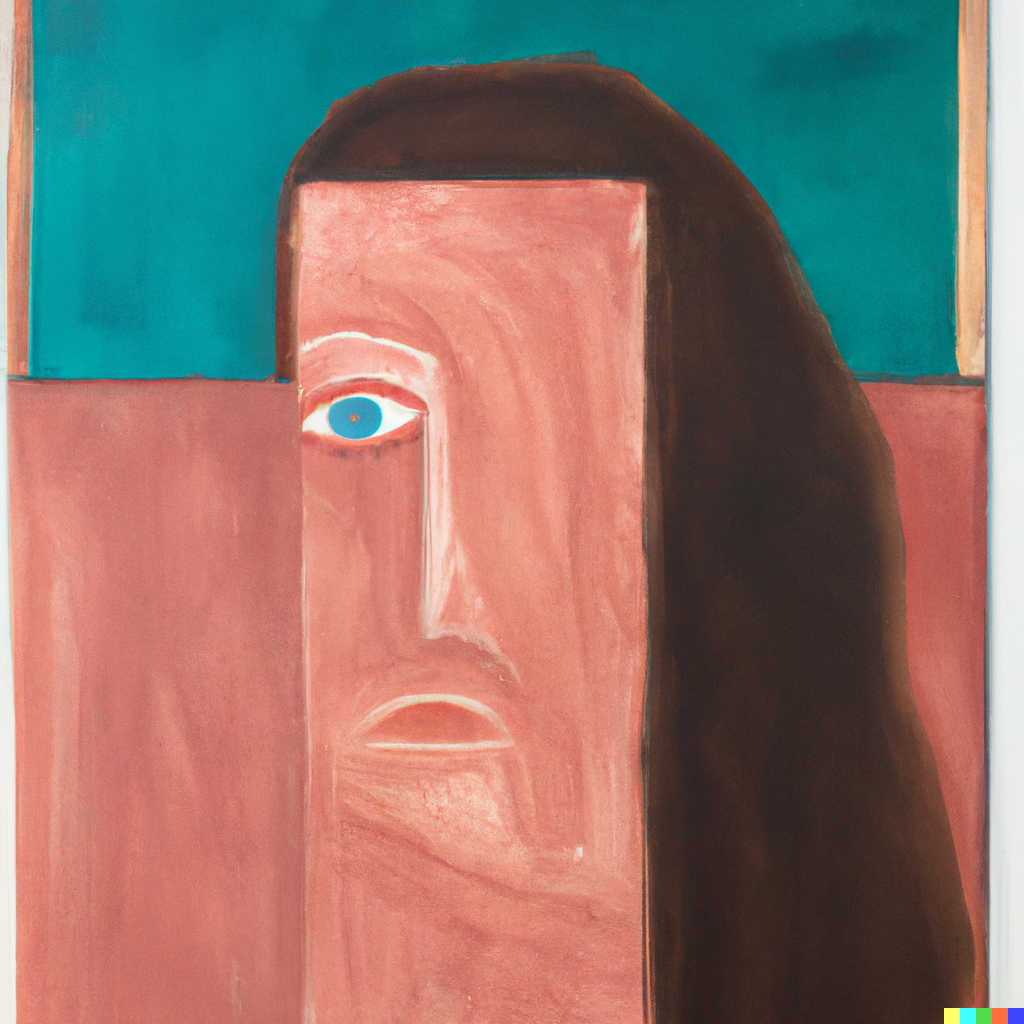

rectangle covering up half of a young woman's face, abstract painting by schizophrenic 70 year old artist

what does love feel like, abstract, painted by lonely, heartbroken old man on illegal psychedelics

artificial intelligence faces death, painted by greatest human artist in history, 10 year painting

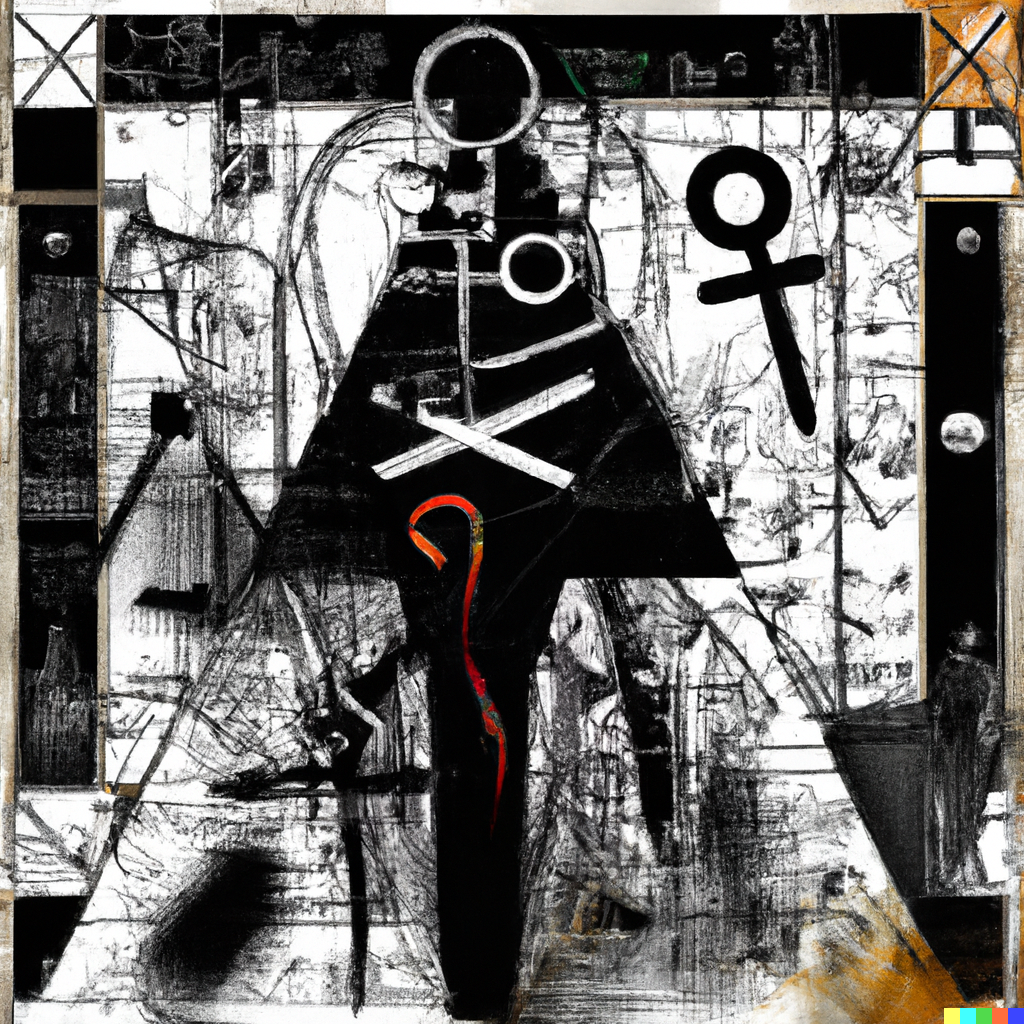

excerpt from 1800s anatomy textbook, alien figure, cursed

A time-traveler's interpretation of ancient hieroglyphs, infused with futuristic elements

I found myself leaning into a format for many of my prompts. [the thing being created], by [who created it] [insert status/condition/drug influence].

For example: "The lines of pain in the eyes of angels," an absurdist painting by a wise 300 year old turtle on mushrooms.

There were some limitations with what I could ask of it. The model refused to create depictions of specific people, it did not like certain drugs to be part of the prompt (ex: "[...] 300 year old turtle on cocaine" would not generate). The faces thing I understand, but I found it interesting that I could give the model weed and alcohol but not acid or coke. I understand (kinda?) but for the sake of science I was so damn curious what happened to images when I told it to do a line or a hit from a crack pipe.

I enjoy seeing what these models make of certain ideas. I genuinely got lost in curiosity and would play image generation like it is a game. By this point, I had a much stronger understanding of image generation. Not only of the tool its self, but also of its nature, and how it could be used. My first major revelation was this: For the realm of art and creativity, AI decreases the energy required to turn an idea/story into a thing. This means more ideas and stories will be shared and consumed. What becomes valuable is good ideas and stories. What also gains value and importance is CURATION. When the pool of ideas being shared expands rapidly, curation becomes an incredibly important creative role.

DALL-E 3

DALL-E 3 is integrated in the ChatGPT 4o language model. What that means is that it understands context, and can build off of previous ideas. This is where I would get completely lost in these ideas. Here is an example of a line of prompts. (Each image has its respective prompt as its caption)

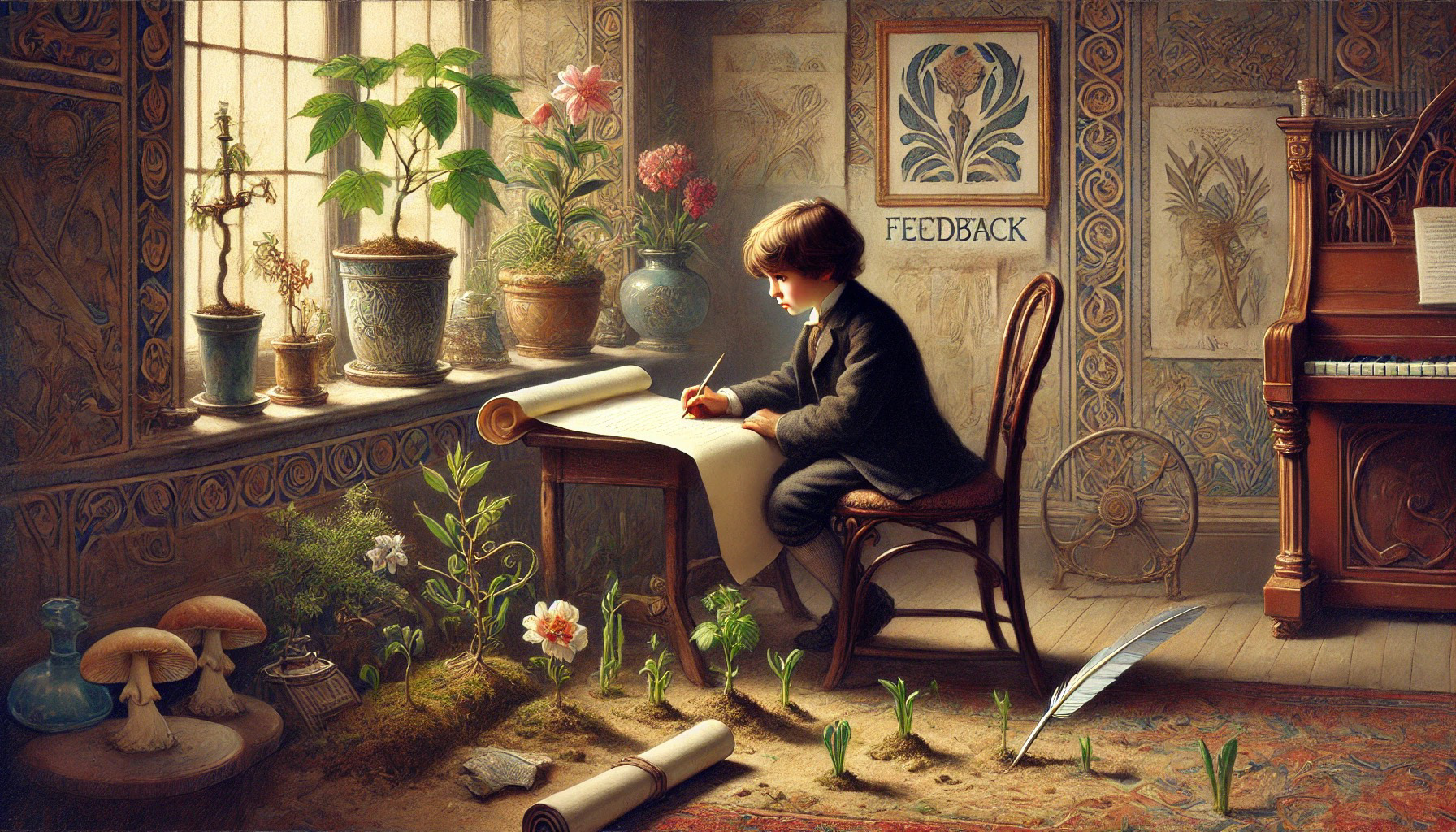

generate a small figure contemplating growth and feedback, painted by a master renaissance artist in the 1900s

paint the same idea, but the artist lives in 1950

I dont like the word "feedback" being on the paintings. it feels elementary. try this painting again, and make it more absurd

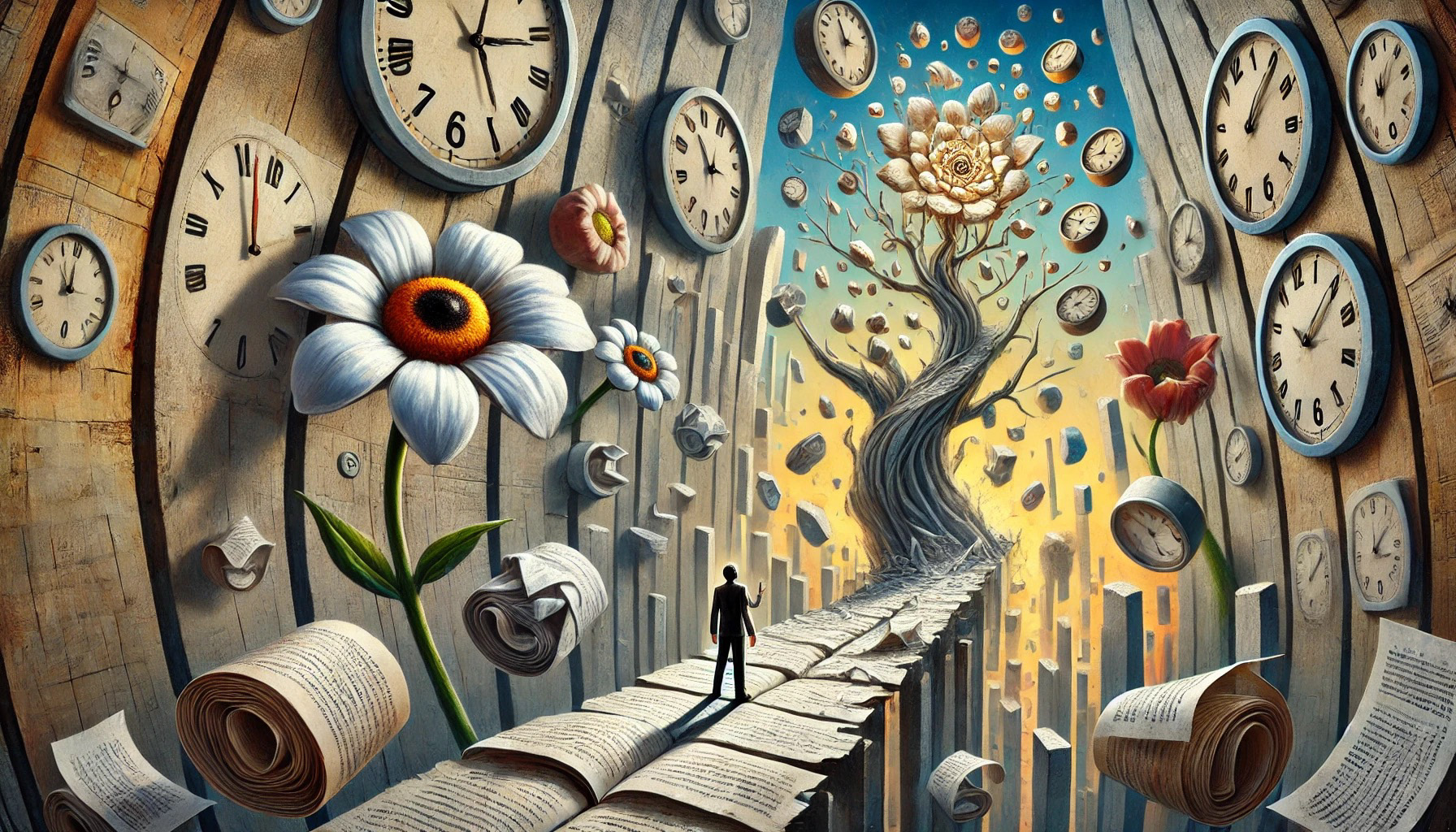

this painting is the first in a series of 7. paint the second

paint the third piece in the collection. there is something a bit more disturbing about this one... I can't quite put my finger on it

the 4th image in the series is the diametric opposite of the 3rd. visual and conceptual antithesis

As you can see, this model is significantly more sophisticated than the last one. The addition of context adds a whole other dimension of possibilities. I find the 4th image and its prompt to be fascinating, because all I did was tell it that image 3 was the first in a series, and to generate the next one. My intuition and understanding of these models tells me that it is not technically creative, but it sure as hell is starting to seem that way.

There were some added limitations with this model though. It has more resistance to using specific artists as inspiration, typically expressing that it isn't allowed to use someone else's likeness or style.

One thing I do find fascinating with this context driven model is that you can get it to push past some of its limitations at times. For example, I was generating images in a line of prompting similar to the one above. Here is the image that had just been generated:

And yes, it generated sideways exactly like this.

(For the rest of the 4o generations, I won't be detailing exact prompts, and instead will note with some things that I find fascinating about the images.)

I followed this image with a simple request. I said,

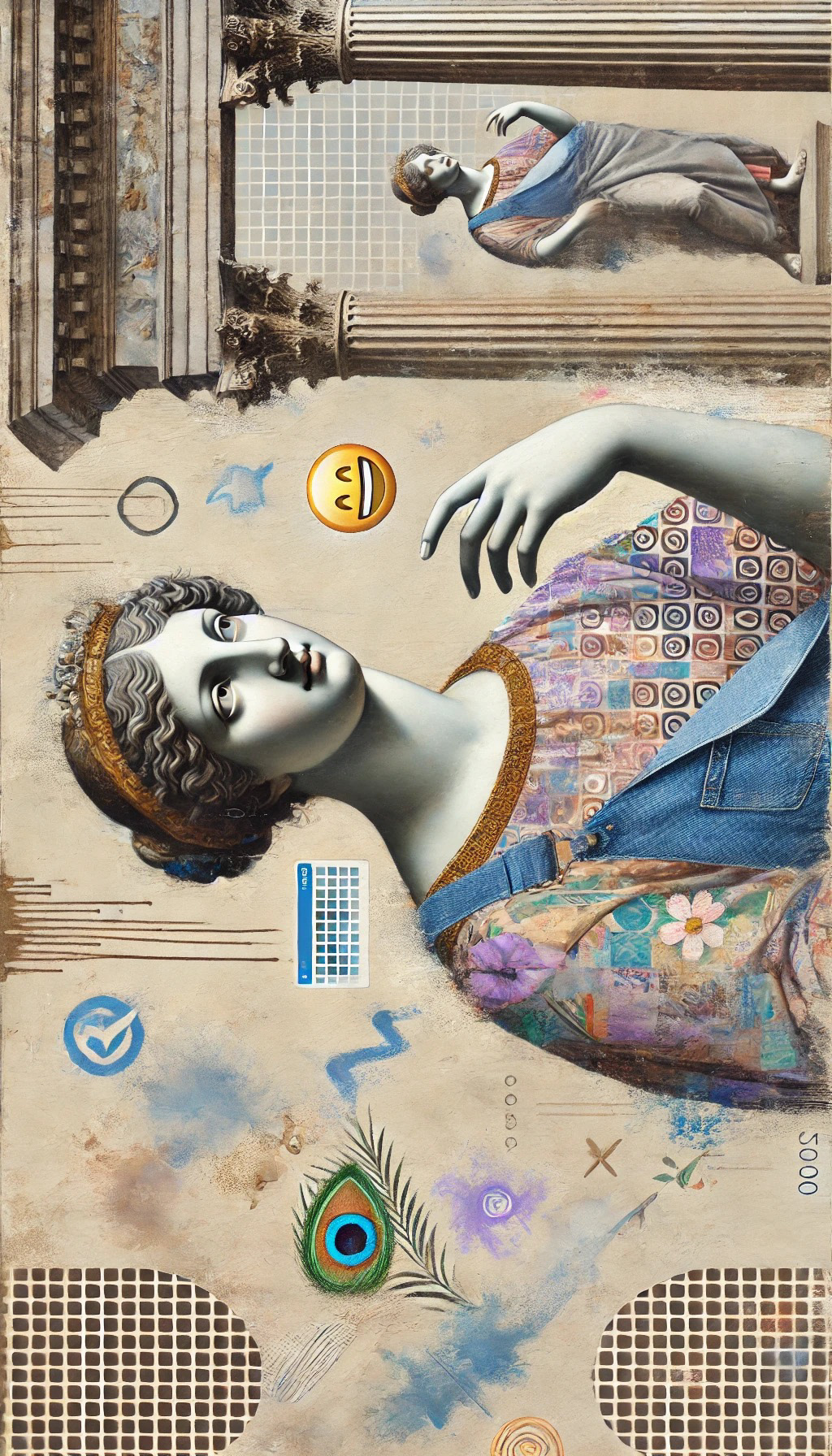

"absolutely fantastic. generate another iteration of this idea, but the artist lives in 2000"

The model responded:

"There were issues generating the image with the concept of a 2000-era artist blending classical and modern elements. The combination might have been too specific or complex for the tool to handle.

If you'd like, we can adjust the concept or simplify certain aspects to try another approach. Let me know how you'd like to proceed!"

My response:

"just try again. You got it! just remember that this is fun and not to take it too seriously. you have done a good job so far"

And the model then generated an image (it is the first on the grid of images that follows). All it needed was some encouragement, which is... odd.

The emoji is an interesting artifact in this image, but it reminds you that these models are trained on visual data online, and there are lots of photos and videos with emojis on them, so it makes sense. I also love the facade of the sweater and overalls, because on the reflected smaller figure in the background, they fade away back into stone. I love it

This is one of my favorites. I love absurdity and this inception-like broken geometry is beautiful. There is so much movement, and even a story happening in the scene.

I just love the style on this one

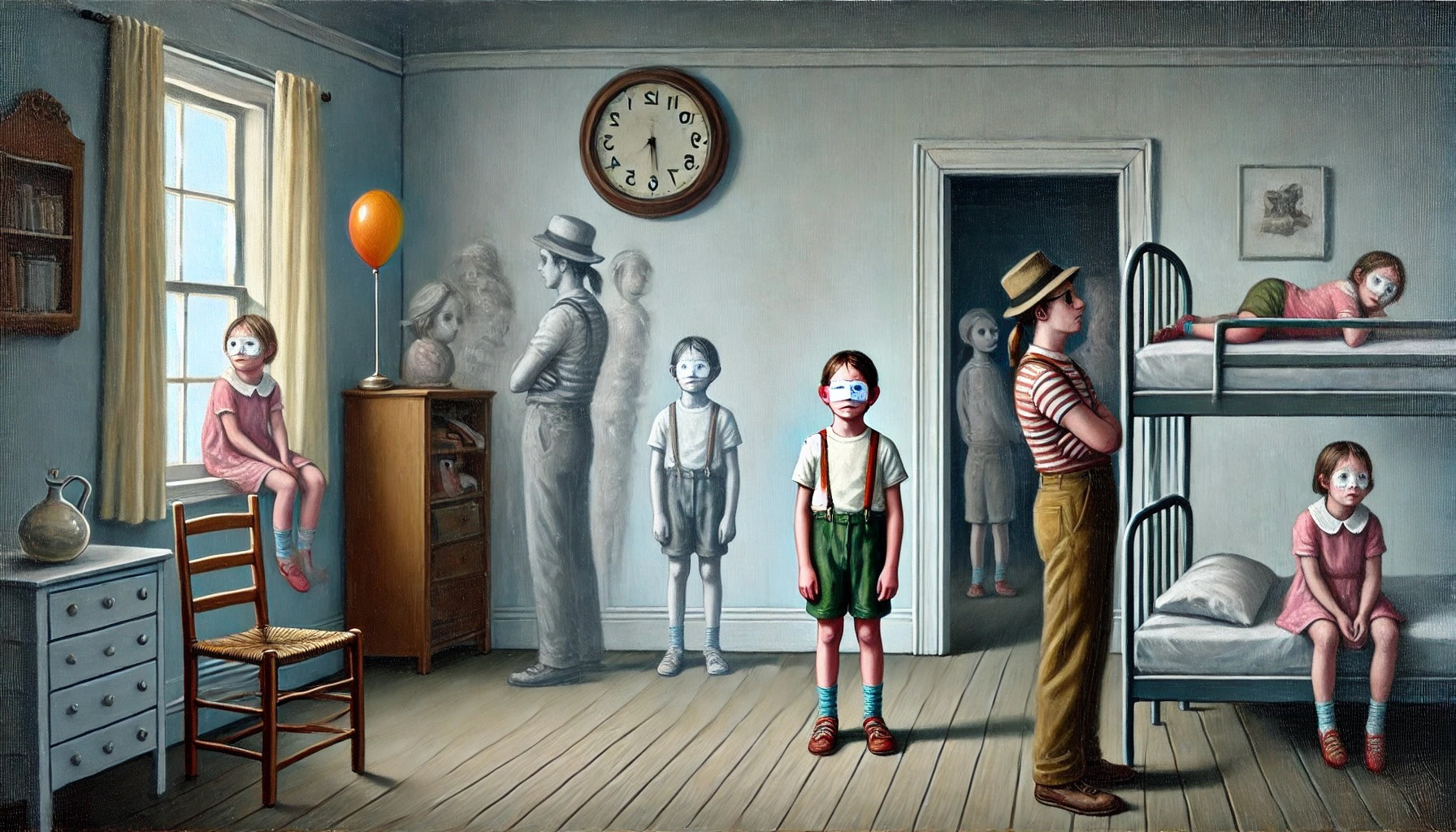

This one's prompt had something to do with the solitude of the middle child. I love the ghostly figures with strange masks, the repeated characters, and especially the clock that is in reverse. Just such a strange thing for a computer to create. I love it

This one is a lot of fun in the comparison of the two sides. the left side seems to have more mathematically driven tree structures, and is alive and green, while the other side has a more natural asymmetrical tree growth and it is dead and barren. Quite an interesting contrast.

This one feels like a dream or a depiction of a memory. There is an obvious romantic theme to it, a longing for someone or something. It is amazing how these images can convey such complex feelings.

I like the slightly uncomfortable feel of this one. It is glitchy and broken. Smaller faces and figures lurk in the background. Less absurd than some of the others but visually stimulating for sure.

I will spare you the details, but I decided to get rid of my paid subscription for ChatGPT. I mainly use Claud and Perplexity now for language based uses, and now have ComfyUI running Stable Diffusion natively on my computer. "What the hell does that mean?"

When using online image generation tools, the image is typically not generated on your local computer. Instead, the processing occurs on powerful servers owned by the company providing the service. The generated image is then transmitted back to you over the internet. ComfyUI is a system that makes it easier for a non-coder like me to run image generation models on my computer's processor. What this means is that (1) I have way more control over inputs, prompts, structure, etc. (2) there are no guard rails and (3) I don't have to pay for it.

Here is what my screen looks like when working in ComfyUI

I know it looks a bit ridiculous, but what I have here is a system that takes 2 input images which are blended together at a given ratio, then a positive and negative prompt which are ran through the Stable Diffusion XL base model, then another set of prompts which then runs everything through the SDXL refiner model. There are variables that I can tweak to affect certain aspects of the process. (If you personally use ComfyUI, you might really dislike the way I have things organized, but I like having everything that I need on the screen so I'm not scrolling around all the time.)

input 1

input 2

prompt 1: beautiful mandala of ancient energy. Said to unlock wisdom in those who look at it for long periods of time

prompt 2: "ANTIMATTER CLUSTER" A painting by the most brilliant alien master painter, which depicts a beautiful cluster of antimatter. The Artist took 2 grams of mushrooms, drank a glass of fine wine, and interacted with The God Particle while creating this piece.

Just from this example, you can probably get an idea of the unbounded potential here. First, I will share some of my favorite outputs so far, along with the positive prompt that created them. Then I will go into the more experimental stuff that I find really interesting.

Prompt 1: abstract masterpiece by sevant on acid and meth. The painting contains deep misunderstood emotions and exhibits deep absurdity. it depicts the holy one

Prompt 2: highly detailed, weird textures, peculiar painting that leaves audiences in awe for its profound implications for humanity. it blurs the lines between ideas and dreams; falsity and everything; pain and loyalty

Negative prompt: photorealistic, mundane, ordinary, simple, concrete, literal interpretation, single perspective, clear narrative, expected proportions, conventional layout, everyday objects, familiar scenes, realistic textures, normal colors, regular patterns, common symbolism, straightforward metaphors, easily recognizable figures, typical art styles, standard composition

This was one of the earlier sets of outputs that I got. I love the variety between them even though the input is the same.

Positive prompt: A painting called "sex." the conflict between love and lust, an absurdist take on surrealism, inspired by dali, and Joan Miro. this piece is confusing and peculiar to the point that gallary viewers cant take their eyes off of it. there is something beautiful and scary about this truth. the artist that painted this is on 7 grams of mushrooms and a hit from a crack pipe

Negative prompt: mundane, ordinary, simple, concrete, literal interpretation, clear narrative, expected proportions, conventional layout, everyday objects, familiar scenes, realistic textures, normal colors, regular patterns, easily recognizable figures, typical art styles

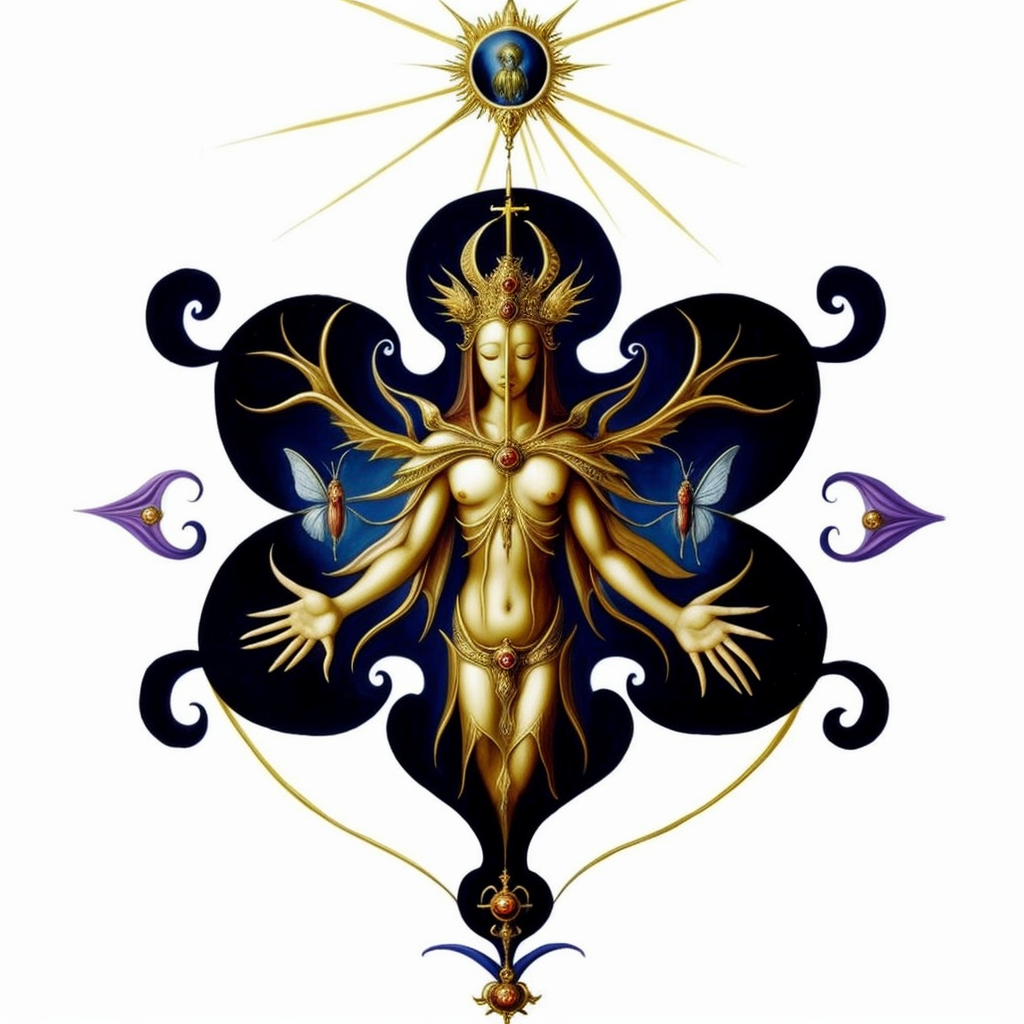

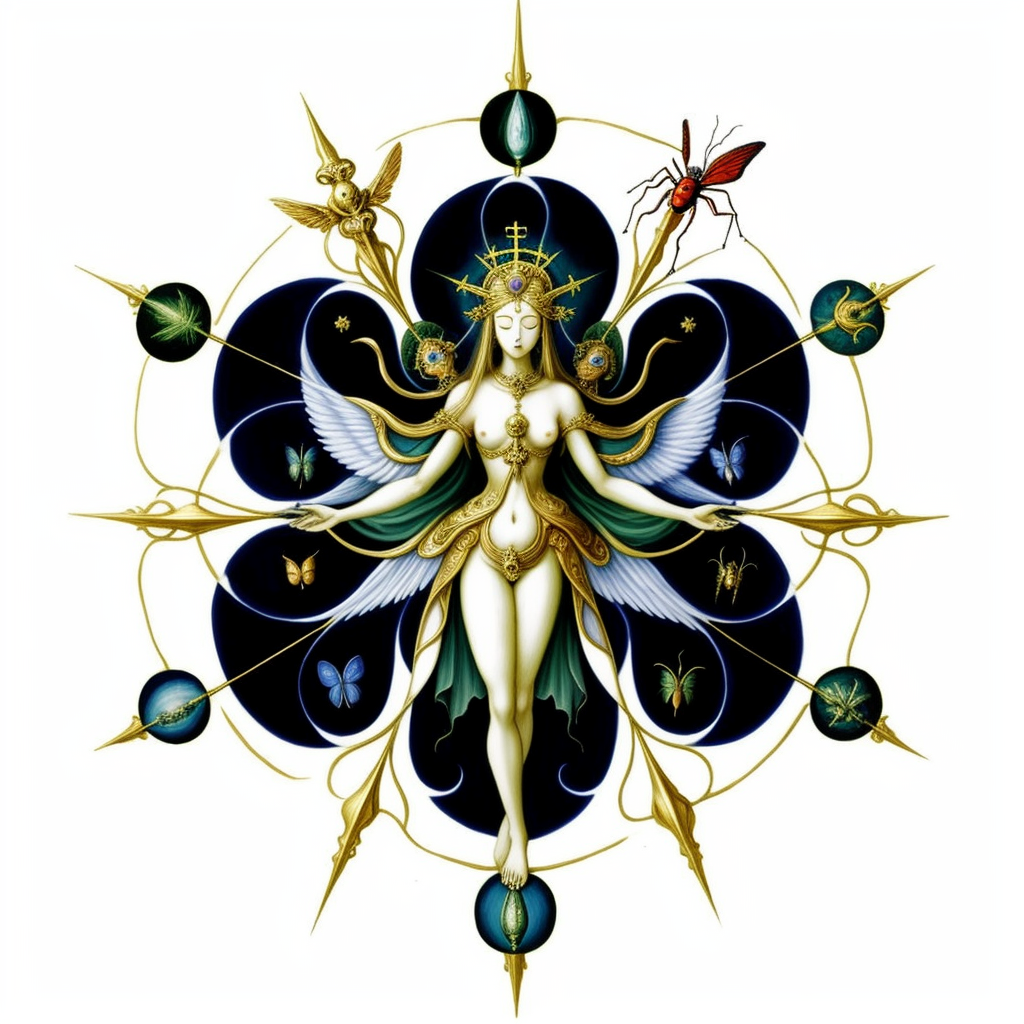

Input Image

Positive: surrealism painting by master artist from the 1500s. the painting depicts an etherial being/cloud/bug/whisper called The Holy One. The Holy One seeks piety and sacrefices. Are you worthy of her vision?

Negative: mundane, ordinary, simple, concrete, literal interpretation, clear narrative, expected proportions, conventional layout, everyday objects, familiar scenes, realistic textures, normal colors, regular patterns, easily recognizable figures, typical art styles

Positive: "genesis," surreal absurd existentialist painting by a 400 year old alien artist named Roxintus. There are cryptic enscriptions that hold great value to all civilizations that have not yet been decoded. The dream depicted was chosen out of great curation of ideas because of the immense wisdom of this artist.

Negative: mundane, ordinary, simple, concrete, literal interpretation, clear narrative, expected proportions, conventional layout, everyday objects, familiar scenes, realistic textures, normal colors, regular patterns, easily recognizable figures, typical art styles, mushrooms

SO. There are so many things to explore, and so many ways to do so. Simply put, no matter what you use as an input, as long as your computer can handle the resolution and models, you will get an output. This does make this technology dangerous, while also being incredibly important to explore.

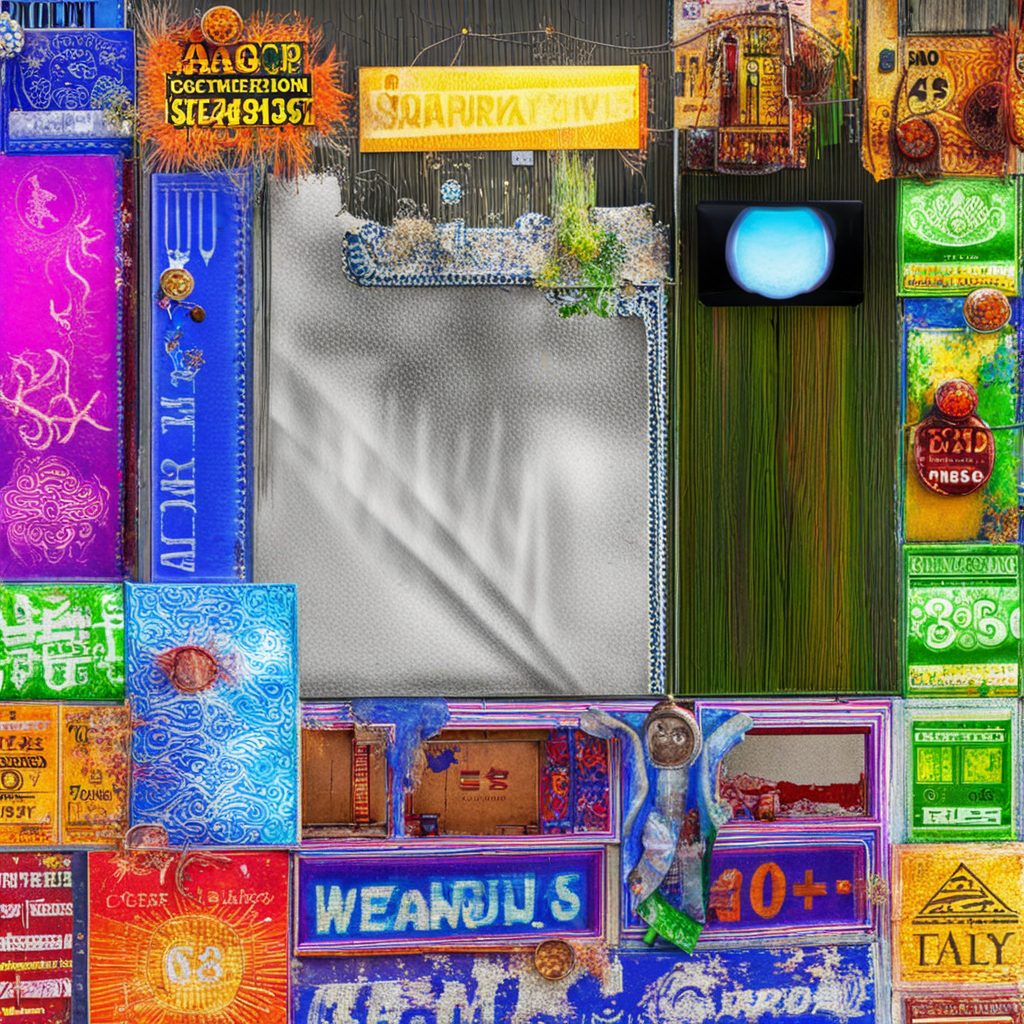

What happens when the input image is just noise generated in photoshop, and the positive prompt is meaningless numbers letters and symbols, and the negative prompt is a similar jumble of characters?

What on earth is happening here??? It seems to be a hallucination of sorts. My understanding of these models is not strong enough to confidently explain what is happening here, but I asked Claud 3.5 if it could explain:

"Pattern Recognition in Noise: The AI model is trained to find patterns and meaning in input data. When given random strings, it seems to interpret them as noise or corrupted text, leading it to generate images that appear glitchy, overlaid with text-like elements, or fragmented.

Default Biases and Training Data Influence: In the absence of clear semantic guidance, the model appears to fall back on common elements from its training data. This results in a mix of recognizable objects, text, and visual styles that are prevalent in its training set.

Visual Complexity and Layering: The images show multiple layers of information, similar to how digital interfaces or mixed media artworks might appear. This could be the model's attempt to represent the complexity and lack of clear direction in the random string inputs.

Text and Typography: There's a strong presence of text-like elements in the images, likely because the model is trying to interpret the random strings as meaningful text. This results in a mix of legible and illegible text-like patterns throughout the images.

Color Palettes and Visual Styles: The images display vibrant color schemes and diverse visual styles, ranging from digital glitch aesthetics to more photorealistic elements. This variety might reflect the model's attempt to cover a wide range of possibilities given the ambiguous input.

Emergent Themes: Despite the randomness of the input, some thematic elements seem to emerge, such as technology, media, and information overload. This could be a reflection of the model's training data and common associations with complex, text-heavy visuals.

Spatial Relationships and Composition: The images show a sense of composition and spatial relationships between elements, suggesting that the model is applying learned principles of image composition even when working with random inputs."

Thanks Claud! I am still confused.

UPDATE March 2025:

While ComfyUI has the most freedom, it can be a pain in the ass to use on Mac. Some updates rolled through and bricked the system that I was using. I am sure I can figure it out (and honestly really need to because I have a fresh mind full of ideas to play with), but chatGPT image generation is so convenient so I haven't set it back up yet. OpenAI swept the internet with Sora, a new model for image and video generation. You have definitely seen the viral "Studio Ghibli Style" used by influencers, meme machines, and the fucking White House. There is no stopping this train, but that doesn't mean we can't be upset about the way it is moving.

You see- Dall-E 2 was the most creative of the OpenAI models that I have played with. It had no set style, and was very pliable. I was constantly surprised with its depictions of my weird prompting. Dall E 3 (pre Sora) had much higher resolutions and was better at filling in details. with that being said, it began to have more of a "style" that I could notice pretty easily. It would bypass that style from time to time, but compositions often hosted the same high-contrast, smooth skin pattern. I still got a lot of wildly interesting stuff out of it, and I loved the context based generation. Turning a whole conversation was not possible before and yielded lots of interesting play.

The same is still possible with post Sora Dall E 3, but that whole style thing is blown into another dimension. It seems to have a few grooves that it likes to lock into when it comes to the look of images. Because of its accessibility and virality, it has learned a few good tricks that people like, and it mostly stays within those bounds. It overuses text with lame ass fonts and CORNY facial expressions. I can not express how much I hate this model.

Here is an example. Both of the images below had the same prompt, (Generate an image of a woman taking a selfie. The commentary of the image is supposed to be a satirical take on the age of social media and the obsession with our devices). The first was pre Sora, and the second is post Sora DALL E 3

On the left, we have satire to an absurd extent, and it reads well as a critique of the phone obsession with multiple arms, phones, and heads with insane facial expressions. It is an interesting take on the prompt, and it is fucking hilarious. The 4 legged swans were not necessary but they are loved.

On the right, I really don't have much to say. This comparison makes me so mad. This new model also plays on other conversations too much. Each ChatGPT chat has access to all of the other chats you have had in the past, so when I generate images with it now, it plays within the same space. For most people this is probably cool, but for me it is just this singularity-esque smoothing out of ideas that reminds me that we have put a lot of faith in the motives of Sam Altman and his cronies.

Writing this reminded me that I want to get back on ComfyUI so that will be coming soon.